Researchers at MIT have shown us an example of how AI-based image recognition software can be hacked. How could this give us a new perspective on computer vision?

In an ironic twist, it seems hackers can play mind games with image recognition software.

Computer vision software like Google’s Cloud Vision API is, as one might imagine, pretty complex, but it isn’t immune to hacking. Researchers at MIT recently proved that by getting past its defenses in an unusual way.

Fortunately, this was a strictly ‘white hat’ affair, and it was MIT researchers rather than malicious hackers who figured out the hack. Still, it raises an important question as AI software becomes more and more advanced.

In the future, will we be able to trust AI enough to let it drive, shop, or babysit for us?

The question would sound far-fetched if we didn’t know that people are already working on technology capable of filling those roles. They are, though, and that means hacking could present a major security risk.

The risk hasn’t gone unnoticed by developers. When a company as large as Google works on a project like this, you can expect it to put serious protections in place.

So to get to the bottom of this latest vulnerability in computer vision software, let’s take a closer look at MIT’s hack.

Hacking the ‘Black Box’ of Computer Vision

Google’s Cloud Vision API scans pictures and picks out the different objects in them.

Ideally, the software can tell you what is in the picture, whether it is a dog, a person, or even what the temperature outside is. This is a valuable skill for AI to have, especially if we want it to do things such as drive our cars.

It’s valuable, but it is also safety-critical, which made it a good target for MIT’s research team.

The researchers worked under what are known as “black box” conditions: they could see the AI’s output, but they had no knowledge of the software’s inner workings. That makes an attack harder than when the model’s internals are visible, but the team made progress in gradual steps.

Guided only by Cloud Vision’s output, the team changed an image gradually, pixel by pixel. After about 1 million queries, they had worked out how to convince the AI that it was looking at something radically different.

For example, they convinced Cloud Vision that a picture of two skiers was a dog.

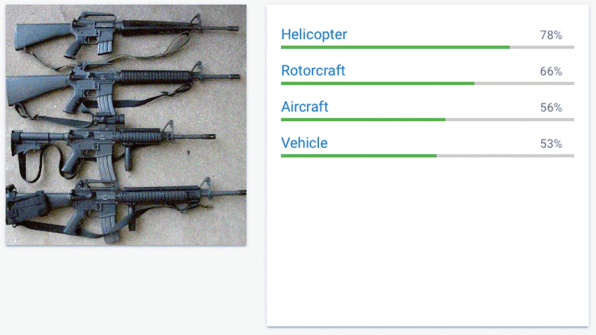

They also made Cloud Vision think that a row of guns was a helicopter.

In short, the researchers subjected the AI to the software equivalent of gaslighting.

Who would have thought that you could gaslight image recognition software?

This development puts the future of computer vision into perspective. Far from spelling the end of the technology, it is a sign that we need to start taking its security very seriously. It has to be secure if it is going to have a future, and we very much want it to have a future.
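For readers curious how a query-only attack can work at all, here is a minimal Python sketch. It is not the MIT team’s algorithm: it swaps in a much simpler greedy random search, and the classifier is a hypothetical toy standing in for a hosted service like Cloud Vision. As in the black-box setting described above, the attacker only sees output probabilities. It nudges one pixel at a time, keeps a change only if the target label’s score goes up, and keeps every pixel within a small budget (`eps`) of the original image. The names `BlackBoxClassifier` and `targeted_attack`, the two “skiers”/“dog” classes, and all parameter values are illustrative assumptions.

```python
import math
import random


class BlackBoxClassifier:
    """Hypothetical toy stand-in for a hosted vision API (not Cloud Vision).

    The attacker may only call query(), which returns class probabilities;
    the weights are treated as hidden.
    """

    def __init__(self, weights: list[list[float]]) -> None:
        self._weights = weights  # one weight row per class
        self.queries = 0

    def query(self, image: list[float]) -> list[float]:
        self.queries += 1
        logits = [sum(w * x for w, x in zip(row, image)) for row in self._weights]
        top = max(logits)
        exps = [math.exp(z - top) for z in logits]  # numerically stable softmax
        total = sum(exps)
        return [e / total for e in exps]


def argmax(values: list[float]) -> int:
    return max(range(len(values)), key=values.__getitem__)


def targeted_attack(model, image, target, eps=0.2, step=0.05,
                    max_iters=5_000, seed=0):
    """Greedy random search (an illustrative assumption, not MIT's method):
    nudge one random pixel at a time and keep the change only if the target
    class's probability rises. Each pixel stays within eps of its original
    value and inside [0, 1]."""
    rng = random.Random(seed)
    adv = list(image)
    probs = model.query(adv)
    for _ in range(max_iters):
        if argmax(probs) == target:
            return adv, True
        i = rng.randrange(len(adv))
        old = adv[i]
        lo, hi = max(0.0, image[i] - eps), min(1.0, image[i] + eps)
        adv[i] = min(hi, max(lo, old + rng.choice((-step, step))))
        if adv[i] == old:
            continue  # move was clipped away entirely; no query spent
        candidate = model.query(adv)
        if candidate[target] > probs[target]:
            probs = candidate  # keep the improvement
        else:
            adv[i] = old  # revert the pixel
    return adv, argmax(probs) == target


# Usage: a 16-pixel "image" and two hypothetical classes. Class 0 ("skiers")
# responds to the first 8 pixels, class 1 ("dog") to the last 8.
weights = [[1.0] * 8 + [0.0] * 8, [0.0] * 8 + [1.0] * 8]
model = BlackBoxClassifier(weights)
original = [0.6] * 8 + [0.4] * 8  # starts out classified as class 0
adv, success = targeted_attack(model, original, target=1)
print(success, model.queries, max(abs(a - o) for a, o in zip(adv, original)))
```

Because every nudge, accepted or rejected, costs a query, it is easy to see how an attack on a large, high-resolution model could run to a very large number of queries. The greedy design here is deliberately naive; more sophisticated attacks estimate the model’s gradient from the queries so they can use each one more efficiently.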

Could This Stunt the Growth of Image Recognition?

Deep learning neural networks are inspired by what we know about our own brains. To get AI software to do what we want, we have to teach it incredibly complex tasks.

Some of these tasks, image recognition included, are more complex than you might think.

Researchers in private companies and universities around the world are working on improving a computer’s ability to see and recognize things. It’s one of the few things standing between us and the automation of many of life’s more mundane activities.

Automation has a bright future, but that future could turn into a moving goalpost if the technology becomes easier to exploit.

Rest assured, none of the researchers mentioned above want to see that happen. The fact that MIT’s experiment took place at all is a testament to that.

As Anish Athalye, one of the MIT researchers who worked on the hack, put it: “We want to make sure the good guys can fix these things before the bad guys end up exploiting them.”

Realistically, it’s hard to imagine any image recognition software ever being 100% unhackable, but that doesn’t mean it has to be easy to hack. With white hat researchers like the ones at MIT on the case, there’s hope that critical vulnerabilities will be caught before they are exploited.
