DeepMind has introduced a new AlphaGo program and this time, it’s a far superior Go player than the last iteration.

DeepMind, Google’s artificial intelligence arm, just unveiled the latest version of its AlphaGo program, the AlphaGo Zero.

According to reports, this new Go-playing AI is so powerful that it actually beat the old AI program version 100 games to zero.

It was last year when AlphaGo gained worldwide popularity for its victory over Lee Sedol, a South Korean Go professional player and world champion. The AlphaGo AI beat Lee four games to one.

Before beating Lee, the older AlphaGo version has already defeated three-time European Go champion Fan Hui in a five-game match.

After a year in hibernation, the Go-playing AI is making a comeback. This time, it’s smarter and more independent!

@DeepMindAI just unveiled its new Alpha Go program, Alpha Go Zero #AI Click To TweetNew AlphaGo Program: The Birth of AlphaGo Zero

According to a paper published in Nature, the new AlphaGo Zero has already achieved superhuman performance, winning 100 games to zero against the previous program which defeated Lee.

“Starting tabula rasa, our new program AlphaGo Zero achieved superhuman performance, winning 100–0 against the previously published, champion-defeating AlphaGo.” ~ Deepmind

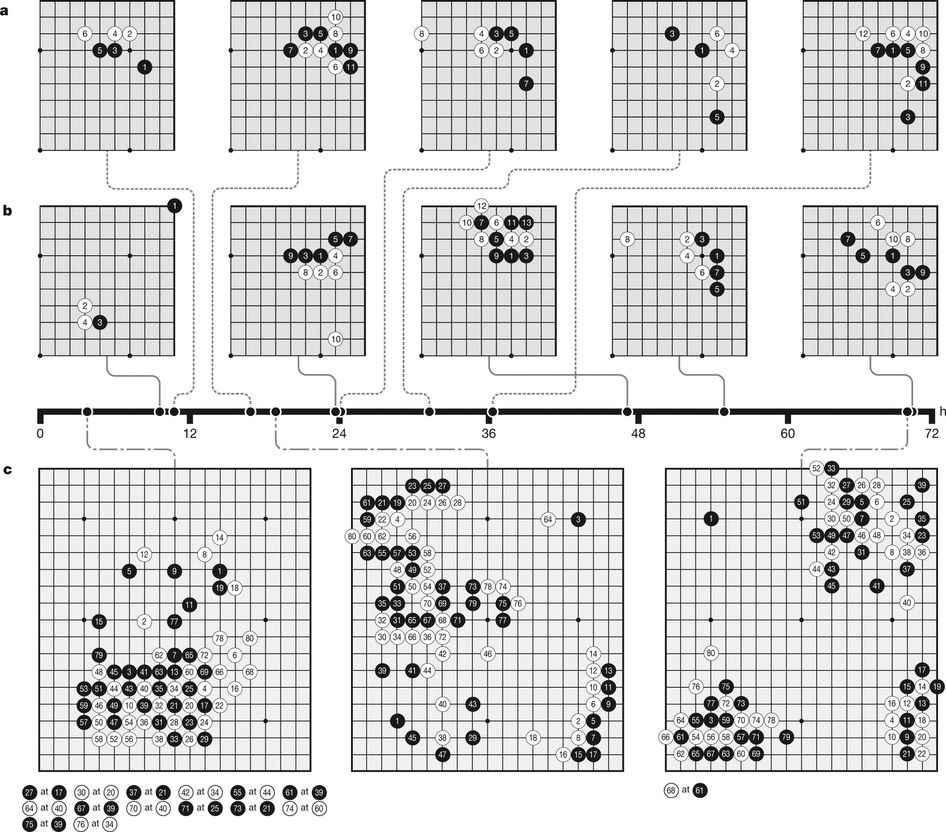

As compared to its predecessor, AlphaGo Zero trained itself for three days, acquiring thousands of years of human Go knowledge by playing itself. The researchers explained in their paper:

“The tree search in AlphaGo evaluated positions and selected moves using deep neural networks. These neural networks were trained by supervised learning from human expert moves, and by reinforcement learning from self-play.”

From there, the DeepMind team introduced an algorithm that they said was based solely on “reinforcement learning, without human data, guidance or domain knowledge beyond game rules.”

“AlphaGo becomes its own teacher: a neural network is trained to predict AlphaGo’s own move selections and also the winner of AlphaGo’s games. This neural network improves the strength of the tree search, resulting in higher quality move selection and stronger self-play in the next iteration,” they went on to say.

If you’re not aware of it, the original AlphaGo program has already demonstrated superhuman abilities. It used a dataset of over a hundred thousand Go games to learn the game. However, the AlphaGo Zero is on another level–and the researchers just equipped it with the basic rules of Go. Nothing more.

Yes, you read that right. No other information. Just the basics.

From there, Zero developed its skills by competing with itself. Every time it wins, the AI updates its record of moves and outcomes then resumes playing itself. It went on and on, a million times over.

Three days of playing gave Zero the capability to defeat the older AlphaGo version. After just 40 days, it was recorded to achieve a 90 percent winning rate against what DeepMind researchers deemed before as the ‘most advanced version’ of the Go-playing AI.

In a press conference, AlphaGo Zero lead programmer, David Silver, was quoted as saying:

“By not using human data — by not using human expertise in any fashion — we’ve actually removed the constraints of human knowledge. It’s therefore able to create knowledge itself from first principles; from a blank slate […] This enables it to be much more powerful than previous versions.”

So this AlphaGo is a somewhat “unsupervised” system. It looks like that really worked. Check out this article that talks about true AI systems that are completely unsupervised.

Silver further said that the new AlphaGo program was able to hit upon a number of well-known patterns and variations on its own during its self-play. It actually led to the AI developing never-before-seen strategies.

“It found these human moves, it tried them, then ultimately it found something it prefers,” Silver added.

Aside from being a smarter player over its predecessor, the new AlphaGo program has other advantages. For instance, it uses less computing power because it runs on just four Tensor Processor Unit (TPU) chip, Google’s cloud computing hardware and software system designed specifically for machine learning–a far cry from the old version which used 48 TPUs!

AlphaGo Zero: A Major AI Achievement

The capability of AlphaGo Zero to learn on its own is deemed by experts in the field as a significant AI development. A rebuttal to a classic criticism of the contemporary AI tech citing that majority of its knowledge was only gained from “cheap computing power and massive datasets.”

This latest research study from DeepMind only proves that its possible to achieve more machine learning improvements by simply focusing on algorithms.

Ilya Sutskever, research director of OpenAI, told The Verge:

“This work shows that a combination of existing techniques can go somewhat further than most people in the field have thought, even though the techniques themselves are not fundamentally new. But ultimately, what matters is that researchers keep advancing the field, and it’s less important if this goal is achieved by developing radically new techniques, or by applying existing techniques in clever and unexpected ways.”

On a separate statement, Noam Brown, a Carnegie Mellon University computer scientist who helped develop an AI to defeat human in poker echoed the same sentiments. He said:

“While the original AlphaGo managed to defeat top humans, it did so partly by relying on expert human knowledge of the game and human training data. That led to questions of whether the techniques could extend beyond Go. AlphaGo Zero achieves even better performance without using any expert human knowledge. It seems likely that the same approach could extend to all perfect-information games [such as chess and checkers]. This is a major step toward developing general-purpose AIs.”

DeepMind’s latest achievement is considered by the company itself as a small move towards realizing its ultimate goal of building a general-purpose algorithm that could have many real-world applications.

Comments (0)

Most Recent