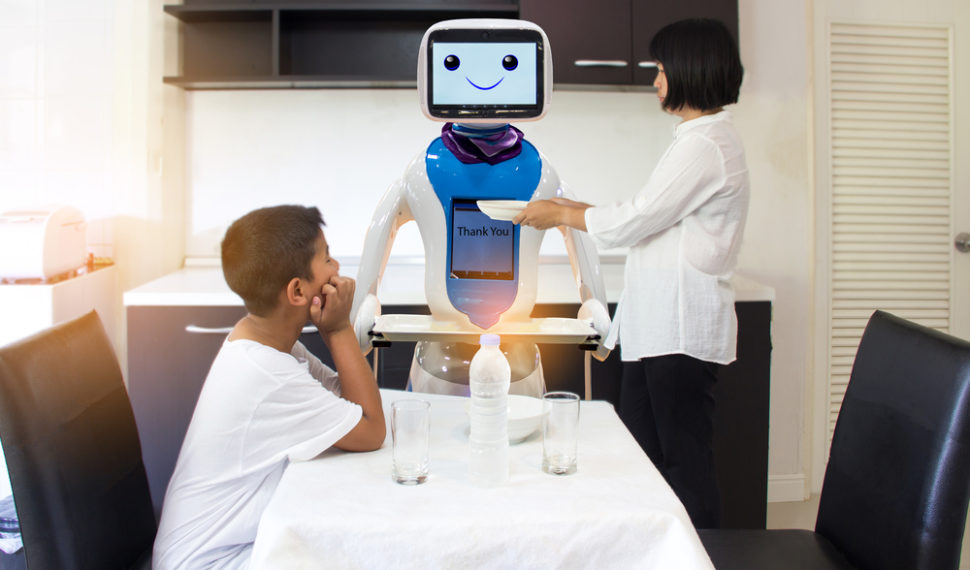

Training a robot to do a job means giving it guidance it can actually act on. That may seem easy, but it’s more complicated than it sounds.

Instructing a robot is really about teaching it to understand the task it is asked to fulfill.

There are different ways to make autonomous robots understand what you want, like setting user preferences, or showing them what to do and how to do it via demonstrations.

Stanford researchers actually combined the two, demos and preferences, into one system that’s more efficient at training autonomous systems than either of the methods alone.

Training Robots Better, Faster, Smarter

Imagine you set a racing car in a video game to optimize for speed, and you see it speeding crazily in circles. Now, imagine the same but with a real-world autonomous vehicle.

Setting aside the sharp difference in consequences between the two scenarios, the problem is the same: if the cars aren’t explicitly instructed to drive in a straight line, nothing will prevent them from doing otherwise.

It’s from examples like this one that Dorsa Sadigh, an assistant professor of computer science and electrical engineering at Stanford, and her lab teammates thought of an efficient system that could set goals for robots.

This new system learns what are called reward functions, the goals a robot is supposed to optimize for, and it is an ingenious way of providing instruction to robots. It combines demonstrations, in which humans physically show the robot what to do, with user preference surveys, which contain the answers people give to questions about the robot’s mission.

Sadigh explained:

“Demonstrations are informative but they can be noisy. On the other hand, preferences provide, at most, one bit of information, but are way more accurate. Our goal is to get the best of both worlds, and combine data coming from both of these sources more intelligently to better learn about humans’ preferred reward function.”
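To make the idea in that quote concrete, here is a minimal Python sketch of how noisy demonstrations and accurate but low-information preference answers could both update one belief about a reward function. It is not the Stanford team’s implementation. Everything in it is an assumption made for illustration: the two features (speed and staying in lane), the five candidate weightings, the noise levels `beta`, and the function names.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(belief):
    total = sum(belief)
    return [b / total for b in belief]

def update_with_demo(belief, candidates, demo, options, beta=1.0):
    """Treat a demonstration as a noisy choice among possible behaviors.

    Assumption: the human picks a behavior with probability proportional
    to exp(beta * reward). A low beta models a noisy demonstrator.
    """
    new_belief = []
    for b, w in zip(belief, candidates):
        z = sum(math.exp(beta * dot(w, f)) for f in options)
        new_belief.append(b * math.exp(beta * dot(w, demo)) / z)
    return normalize(new_belief)

def update_with_preference(belief, candidates, preferred, rejected, beta=5.0):
    """Treat a survey answer ("I prefer A over B") as a logistic choice.

    Assumption: a higher beta than for demos, since the article says
    preferences are more accurate even though each carries little information.
    """
    new_belief = []
    for b, w in zip(belief, candidates):
        diff = dot(w, preferred) - dot(w, rejected)
        new_belief.append(b / (1.0 + math.exp(-beta * diff)))
    return normalize(new_belief)

# Hypothetical features per behavior: (speed, staying in lane).
circling = (1.0, 0.0)        # fast, but driving in circles
fast_straight = (0.8, 0.9)
slow_straight = (0.2, 1.0)
options = [circling, fast_straight, slow_straight]

# Candidate reward weightings, from "only speed matters" to "only lane matters".
angles = [0.0, 22.5, 45.0, 67.5, 90.0]
candidates = [(math.cos(math.radians(a)), math.sin(math.radians(a))) for a in angles]

prior = [1.0 / len(candidates)] * len(candidates)

# Step 1: a human demonstrates fast, straight driving.
after_demo = update_with_demo(prior, candidates, fast_straight, options)

# Step 2: the human answers one survey question, preferring straight over circling.
after_pref = update_with_preference(after_demo, candidates, fast_straight, circling)

for a, p in zip(angles, after_pref):
    print(f"weighting at {a:>4} degrees: belief {p:.3f}")
```

In this toy setup the demonstration only nudges the belief away from the “only speed matters” weighting, and the single preference answer then pushes it away much more firmly. That is one plausible way of reading the “best of both worlds” idea, under the assumptions above.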


The present research builds on previous work by Sadigh and her colleagues, in which they focused on user preference surveys alone. They deemed the preference-based method too slow, so they came up with a way to speed it up. Demonstrations alone don’t work either, because the robot “often struggles to determine what parts of the demonstration are important.”

The team finally found that a combination of demos and preference surveys is the best way to train robots to interact with humans safely and efficiently.

Robots trained with this combined process performed better than those that relied on either method alone, in both simulations and real-world experiments.

“Being able to design reward functions for autonomous systems is a big, important problem that hasn’t received quite the attention in academia as it deserves.”

The Stanford team presented their work at the Robotics: Science and Systems conference last June 24th.
