A team of researchers has developed a new technology that uses brainwaves and hand gestures to control robots.

Researchers from the Massachusetts Institute of Technology’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have created a system that could someday reshape how we control robots: it lets people correct a robot’s mistakes instantly using only brain signals or a flick of the fingers.

According to reports, the system can detect in real time whether a person notices an error as a robot performs its task. An interface that measures muscle activity then lets the person use hand gestures to scroll through the options and select the correct action for the robot to execute.

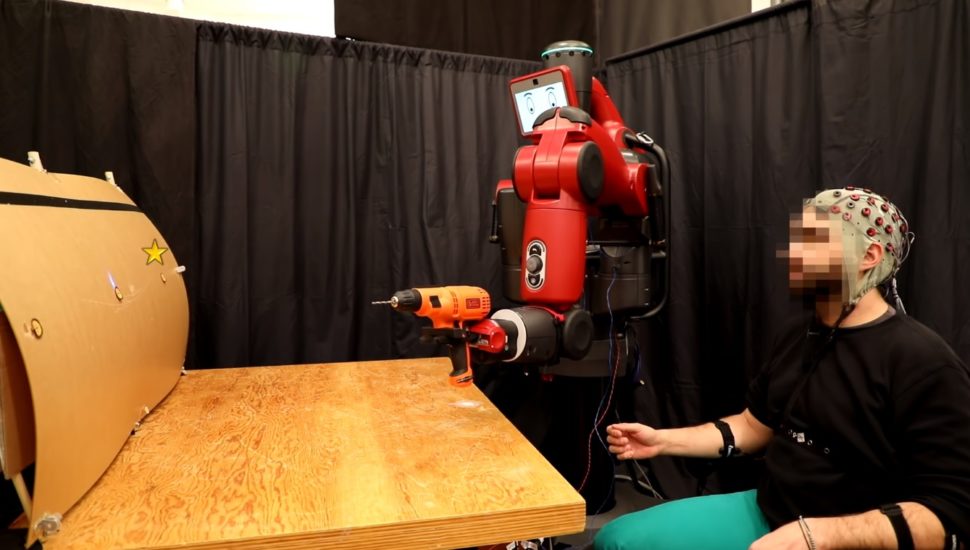

The team reportedly used electroencephalography (EEG) to monitor brain activity and electromyography (EMG) to monitor muscle activity. Both signals are picked up by electrodes placed on the user’s scalp and forearm. Combining the two measurements allowed the researchers to build a more robust bio-sensing system.

“This work combining EEG and EMG feedback enables natural human-robot interactions for a broader set of applications than we’ve been able to do before using only EEG feedback,” Daniela Rus, CSAIL director, said in a statement. “By including muscle feedback, we can use gestures to command the robot spatially, with much more nuance and specificity.”

The team demonstrated the system by having a robot move a power drill to one of three possible targets on the body of a mock plane. Rus’ team reportedly relied on brain signals known as error-related potentials to let the user correct the robot’s mistakes.

“What’s great about this approach is that there’s no need to train users to think in a prescribed way. The machine adapts to you, and not the other way around,” said Joseph DelPreto, lead author of the team’s paper.

“By looking at both muscle and brain signals, we can start to pick up on a person’s natural gestures along with their snap decisions about whether something is going wrong,” he added. “This helps make communicating with a robot more like communicating with another person.”

The team hopes the system could one day improve the lives of elderly people and of workers with limited mobility or language disorders.