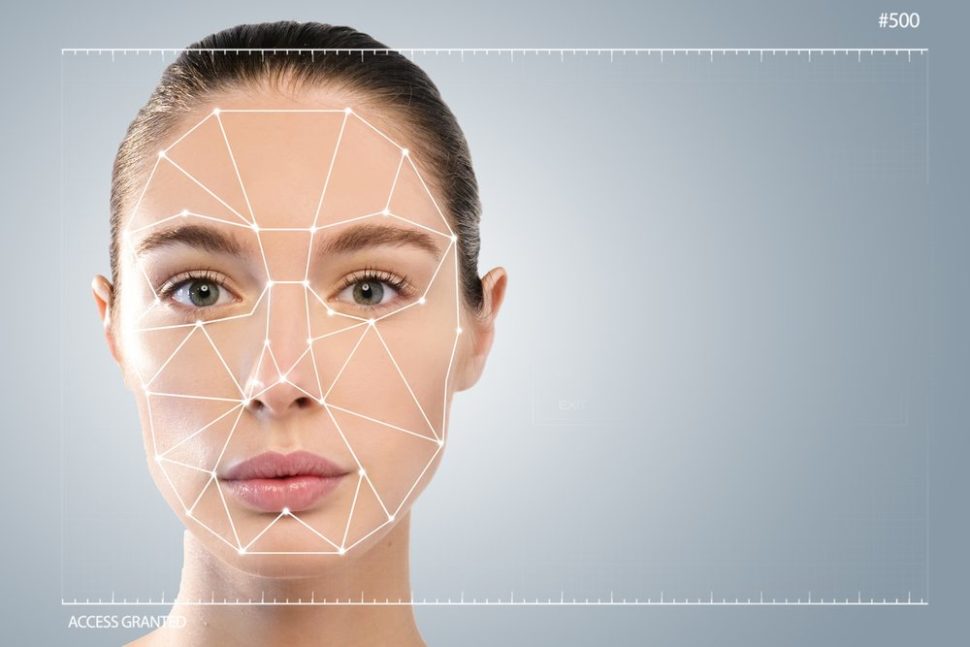

Facial recognition software is becoming increasingly common in law enforcement, yet its error rates remain strikingly high.

Facial recognition seems to grow more ubiquitous every year. The same technology that lets you unlock your phone with your face could also get you wrongly accused of a crime.

Last year, we wrote about how facial recognition AI could help identify disguised criminals. But now, some police forces are reporting false positive rates above 90%.

Why is police facial recognition so good at getting the wrong culprit?

Mixed Reports From Various Outlets Across the Globe

On May 10th, 2018, NPR asked if the U.S. would invest in real-time facial recognition.

The article asserts that “Facial recognition accuracy rates have jumped dramatically in the last couple of years, making it feasible to monitor live video.” The author links to coverage of a Chinese breakthrough in the technology.

But most other coverage of facial recognition software paints a far bleaker picture.

London police have made zero arrests with the technology, which produced only two correct matches, for a false positive rate of 98%. In other words, 98% of the system's alerts flagged innocent people as criminals.
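
The 98% figure is a simple ratio: wrong alerts divided by all alerts. Strictly speaking, that measure is the false discovery rate (one minus precision), not the textbook false positive rate, which divides wrong alerts by every innocent person scanned. Here is a minimal Python sketch of the ratio. The function name is our own, and the counts are illustrative, chosen only to match the reported figure (two correct matches at a 98% rate implies roughly 100 alerts in total); they are not the police's actual tallies.

```python
def false_alert_share(correct_matches: int, false_matches: int) -> float:
    """Fraction of all alerts that flagged the wrong person.

    Press reports usually call this the "false positive rate";
    statisticians would call it the false discovery rate (1 - precision).
    """
    total_alerts = correct_matches + false_matches
    if total_alerts == 0:
        raise ValueError("no alerts to evaluate")
    return false_matches / total_alerts


# Illustrative counts only (hypothetical): two correct matches out of
# roughly 100 alerts is what a 98% figure implies.
correct = 2
wrong = 98
share = false_alert_share(correct, wrong)
print(f"{share:.0%} of alerts pointed at the wrong person")
```

Note that this ratio says nothing about how many people were scanned in total; a system can scan thousands of faces, wrongly flag only a handful, and still have most of its alerts be wrong.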

This month, The Guardian published a long-form article exploring why a technology with such a poor success rate is still in use.

One reason may be that facial recognition technology is not yet specifically regulated. In the UK, camera surveillance and biometric data each have their own regulatory frameworks, but Parliament has not yet debated facial recognition technology.

In fact, even before that report was published, Engadget reported that South Wales Police technology had misidentified 2,300 people as criminals. The force blamed the false positives on “poor quality images” and the newness of the technology.

How Could the Technology Get It So Wrong?

Generally, automated facial recognition is not passive the way a closed-circuit television system is. It works in real time, mapping the faces it sees and comparing them against images stored in other databases.
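
Vendors rarely publish their internals, so the following is only a hedged sketch of the general matching idea. It assumes, as is common in modern systems, that a model (not shown) has already turned each face into a numeric "embedding" vector, and that a match is declared when a live face is similar enough to a watchlist entry. The function names, the three-number vectors, the watchlist names, and the thresholds are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two face vectors: 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_against_watchlist(probe, watchlist, threshold):
    """Return (name, score) for every watchlist entry scoring at or
    above the threshold, best match first."""
    hits = []
    for name, enrolled in watchlist.items():
        score = cosine_similarity(probe, enrolled)
        if score >= threshold:
            hits.append((name, score))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


# Hypothetical, tiny vectors; real embeddings have hundreds of numbers.
watchlist = {
    "person_a": [0.9, 0.1, 0.2],
    "person_b": [0.1, 0.8, 0.5],
}
live_face = [0.85, 0.15, 0.25]

strict = match_against_watchlist(live_face, watchlist, threshold=0.9)
loose = match_against_watchlist(live_face, watchlist, threshold=0.3)
print([name for name, _ in strict])
print([name for name, _ in loose])
```

The threshold is the crucial design choice: with the strict setting only `person_a` is flagged, while the loose setting also flags `person_b`, whose vector looks quite different. Poor-quality images push scores around in the same way, which is one plausible route to the false positives described above.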

In the particular instance The Guardian addressed, police used a specific database of people attending November’s Remembrance Day commemorations in Whitehall.

Those assembled were engaged in lawful protest against public figures. Activists voiced concern that this kind of AI-based bias threatens civil rights.

But, as with data privacy law in the U.S., legislation has not kept pace with the technology.

This has not deterred projects elsewhere in the world from pressing ahead with the technology. After all, China is already advancing by leaps and bounds in its rollout of facial recognition surveillance.

The system’s backers claim it can identify 40 facial features regardless of angle or lighting, and that it can then reportedly match faces with 99.8% accuracy.
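
Even taken at face value, a 99.8% figure does not rule out failure rates like those in London and South Wales, because of the base-rate effect: when almost everyone in a crowd is innocent, even a tiny per-face error rate produces far more false alerts than true ones. The back-of-the-envelope sketch below rests entirely on assumptions of our own: the crowd size, the number of watchlisted people present, and reading "99.8% accuracy" as both a 99.8% hit rate and a 0.2% false match rate. None of these numbers come from the reported system.

```python
def expected_alerts(crowd_size, on_watchlist, hit_rate, false_match_rate):
    """Expected (correct, false) alerts when scanning a crowd.

    crowd_size: number of faces scanned
    on_watchlist: how many of those people are genuinely on the watchlist
    hit_rate: chance a watchlisted person is correctly flagged
    false_match_rate: chance an innocent person is wrongly flagged
    """
    innocent = crowd_size - on_watchlist
    correct_alerts = on_watchlist * hit_rate
    false_alerts = innocent * false_match_rate
    return correct_alerts, false_alerts


# Hypothetical event: 100,000 faces scanned, 10 of them on the watchlist,
# with "99.8% accuracy" read as a 0.2% false match rate (an assumption).
correct_alerts, false_alerts = expected_alerts(100_000, 10, 0.998, 0.002)
share_false = false_alerts / (correct_alerts + false_alerts)
print(f"expected correct alerts: {correct_alerts:.1f}")
print(f"expected false alerts: {false_alerts:.1f}")
print(f"share of alerts that are wrong: {share_false:.1%}")
```

Under these made-up numbers, the system raises about 200 false alerts against roughly 10 correct ones, so around 95% of its alerts are wrong despite the "99.8% accurate" label.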

In a perfect world, where the technology always works, it could protect us.

But, just as we have laws to protect us from government wiretapping or self-incrimination, perhaps we need laws protecting us from inaccurate facial recognition results.

Endemic Issues Won’t Stop Technology Use

Despite concerns, police departments around the world plan to expand their facial recognition technology usage.

China plans to expand its use of sunglasses with built-in facial recognition beyond initial operations in Beijing. In April 2018, the U.S. Army announced that it was developing a version of this tech that works in the dark.

Oh, it apparently will recognize faces through walls, as well, so that’s cool.

The U.S. is already paving the way toward an advanced surveillance program. The faces of half of all American adults are already available for government surveillance, and some airports scan every single person who walks through their doors.

As more faces get scanned and more places incorporate facial recognition technology, we may see more police departments respond like the South Wales Police: with ambivalence.

It is not the police’s fault that the facial recognition AI returned false positives. The fault lies with the AI’s creators because, as we have covered, human programmers can pass their prejudices on to the neural networks they build.

Moreover, Geoffrey Hinton, often called the godfather of AI, has said that backpropagation, the technique used to train neural networks like these, isn’t the way toward true AI.

Perhaps the failure of facial recognition technology shows just how much the tech world needs to revisit its approach not just to advanced surveillance, but to the idea of true AI.