Over a year after developing the text-generating GPT2 AI model, OpenAI has now introduced another AI model that creates images.

Back in February 2019, OpenAI revealed that it had developed an artificial intelligence model capable of making fake news. Called GPT2, the said model can allegedly produce relevant-sounding text from any prompt.

Meaning, GPT2 can be fed with a portion of any human-generated text like news articles or journals. Using statistical methodologies, it will attempt to guess the next words in the said text prompt, spin them, and generate coherent sentences.

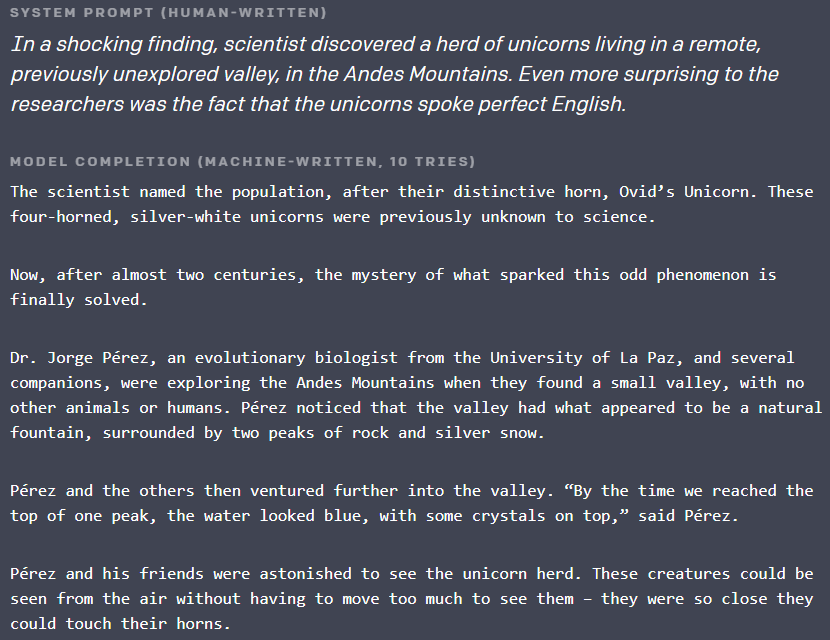

Below is a screenshot of the text that GPT2 generated out of a human-written prompt.

This time, however, the company’s researchers explored what would happen if the GPT2 AI model is to be fed with incomplete images.

GPT2 AI Model Learned to Generate Images

The GPT2 AI model is a prediction engine. It was taught how the English language structure works using billions of words, paragraphs, and sentences taken from every corner of the internet. With that structure, and by using statistical prediction, GPT2 learned to rearrange words and create disturbingly coherent sentences.

But now, OpenAI researchers replaced the words with pixels and trained the AI model using ImageNet images. According to the researchers, the new model they called iGPT (image GPT) successfully learned the two-dimensional structures associated with images. That’s despite initially being designed to work on one-dimensional data.

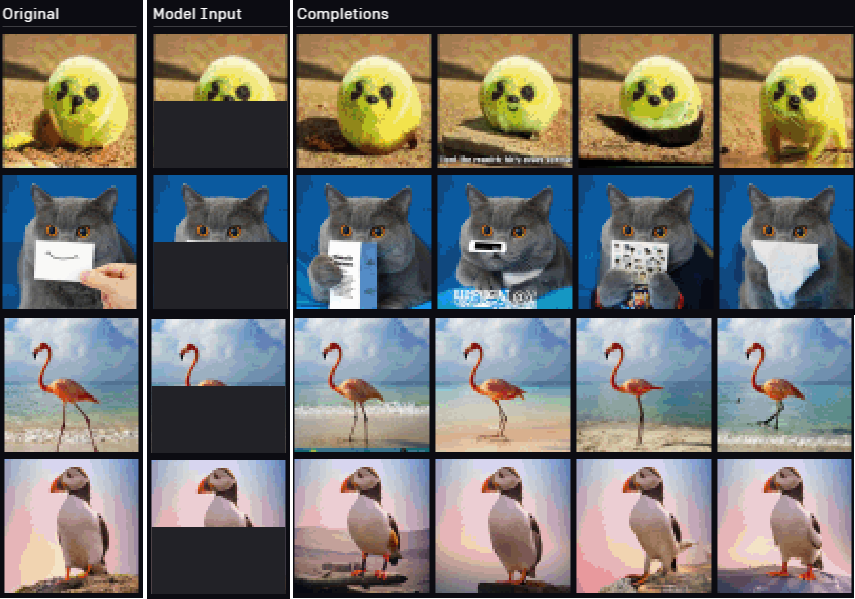

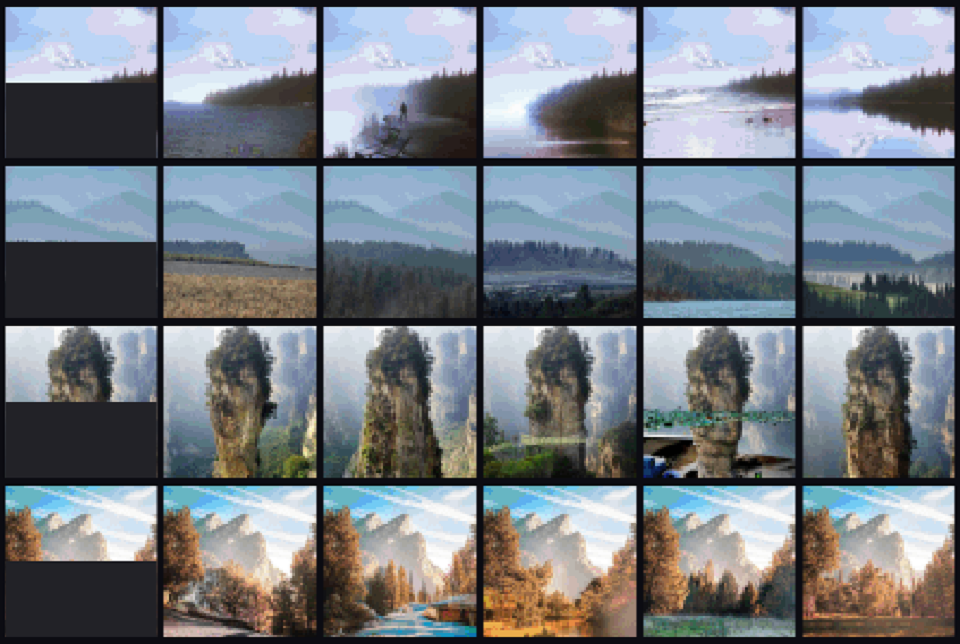

When tested, the scientists found that iGPT could predict the sequence of pixels for the other half of an incomplete image in the same manner as humans. The following are some sample images shared by OpenAI.

According to the researchers, despite iGPT’s capability to learn powerful image features, the approach has its limitations. For instance, it requires significant amounts of computation power. Furthermore, it needs a more advance transformer to be efficient when it comes to low-resolution images.

Another limitation that the researchers mentioned is that iGPT could exhibit biases depending on the data that it was trained on.

“Many of these biases are useful, like assuming that a combination of brown and green pixels represents a branch covered in leaves, then using this bias to continue the image. But some of these biases will be harmful when considered through a lens of fairness and representation. For instance, if the model develops a visual notion of a scientist that skews male, then it might consistently complete images of scientists with male-presenting people, rather than a mix of genders.”

These limitations, according to the researchers, prevent convolutional neural network-based methods from having real-world applications; particularly in the vision domain.

If you want to know more about iGPT, you can read the team’s paper here.

Comments (0)

Most Recent