Humans have been waging war on each other since the dawn of history.

In war, civilizations have always striven to create new weapons of increasing lethality: from clubs, spears, swords, and arrows, to guns and bombs, to nuclear warheads and other weapons of mass destruction.

Warfare is now on the cusp of ushering in a new age, that of autonomous robots.

Current military robotics systems, like drones, always have a human somewhere calling the shots. But as technology advances, we delegate more and more decisions to machines, including the decision to take human lives.

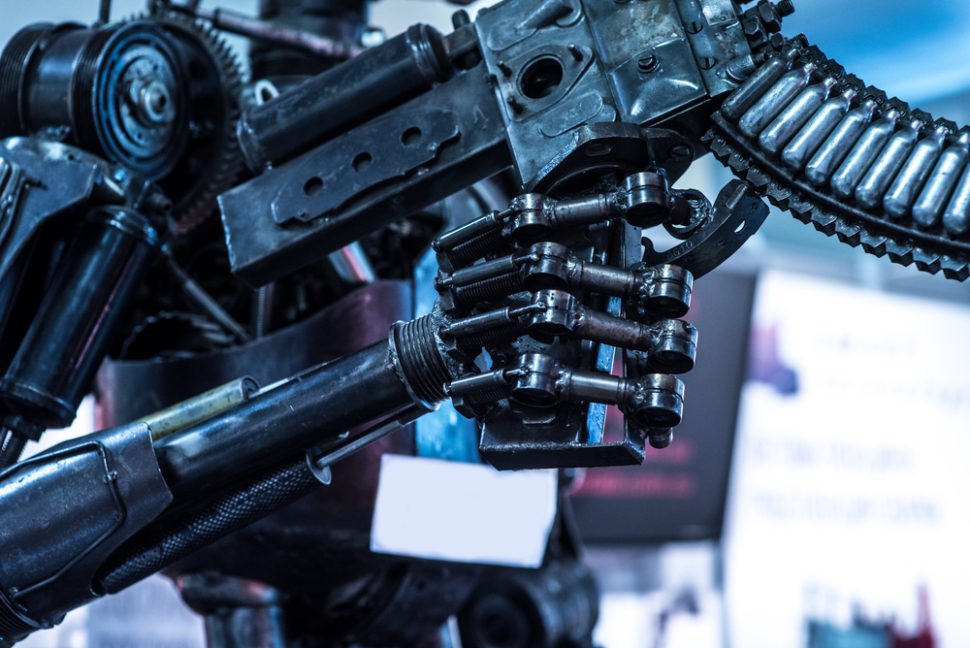

Lethal autonomous robots, or “killer robots”, are AI-powered weapon systems that make their own decisions about who to find, target, and kill on the battlefield.

Preemptive Strike Against Killer Robots

In 2013, dozens of NGOs launched the Campaign to Stop Killer Robots, a coalition working to retain “meaningful human control over the use of force.”

The same year, Human Rights Watch (HRW) and the Harvard Law School Human Rights Clinic sent a memorandum to the Convention on Conventional Weapons calling for a new law to ban fully autonomous robotic weapons.

Six years have passed, and the only thing that seems to be advancing is autonomous weapons technology.

The Martens Clause was introduced in the 1899 Hague Convention II regarding the laws and customs of war on land.

The clause stipulates that in cases not covered by international treaties, belligerent parties should respect a minimum of humanitarian principles to protect people directly affected by armed conflicts.

Human Rights Watch considers the use of fully autonomous weapons a breach of international humanitarian law and reiterates its call for a preemptive ban on killer robots.

“There is an urgent need for states, experts, and the general public to examine these weapons closely under the Martens Clause,”

Robots cannot feel compassion or empathy, and they lack the legal and ethical judgment needed to make the right decisions in a theatre of war.


Last November, at a ceremony marking the 100th anniversary of the end of WWI, the UN Secretary-General called on “States to ban these weapons, which are politically unacceptable and morally repugnant.”

The global public also seems aware of the potential risks of autonomous weapons. A recent study that surveyed people across 26 countries found that 61 percent oppose the use of such weapons.

Activists of the Campaign to Stop Killer Robots from 35 countries met in Berlin, Germany, to advocate for a treaty banning the development and use of killer robots.

Strong resistance to the rise of killer robots can also come from inside the tech companies working on lethal autonomous weapons.

Last January, thousands of anonymous Google employees were collectively named the 2018 “Arms Control Person of the Year” because they pushed the company to end an AI-aided drone targeting system project for the Pentagon.

Google has since committed not to “design or deploy AI” for use in weapons or other applications that may cause overall harm.

Robot developers should use their passion for robotics to help the environment instead of creating killer robots. This is really worth reading.

Deep-water ROVs, humanoid robots, SWEEPER, and others are the result of developers collaborating to fight climate change.